How to Copy Websites Locally for Fast Offline Browsing

Web browsers let you save individual web pages, but saving a complete website to your hard drive page by page can be extremely time-consuming.

Luckily, several apps let you copy an entire website to your local drive in one go, so you can browse it while you’re offline. This article introduces the most popular options.

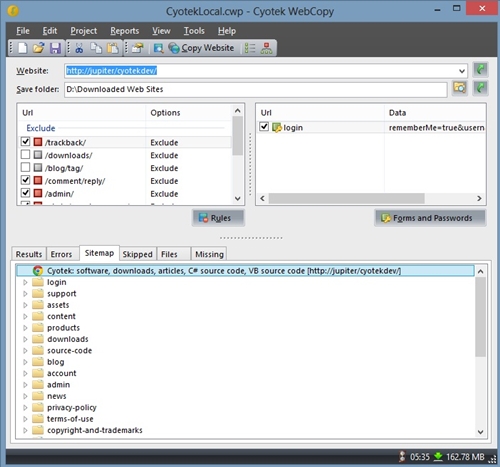

1. WebCopy by Cyotek

WebCopy is a Windows-only program that scans a website for all of its linked resources. It can locate the pages, media, images, file downloads, videos, and other files on a website and download them to your drive. For each page it discovers, it repeats the scanning process until it has found everything on the website.

You can then choose whether to download the complete website to your local drive or only certain sections of it. You can also set up different ‘projects’, which let you re-download the same websites later.
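If you’re curious what this kind of scanning looks like under the hood, here is a minimal Python sketch of a same-site crawler. It is not WebCopy’s actual code; the names `crawl`, `LinkParser`, `fetch_over_http`, `path_prefix`, and `max_pages` are hypothetical. It assumes pages link to each other through plain `href`/`src` attributes (no JavaScript), and it uses `path_prefix` as a rough stand-in for downloading only certain sections of a site.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collects the raw href/src values found on one HTML page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.links.append(value)


def fetch_over_http(url):
    """Download a URL and return its body as text (no error handling)."""
    with urlopen(url) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(start_url, fetch=fetch_over_http, path_prefix="/", max_pages=500):
    """Scan breadth-first from start_url and return {url: content} for every
    resource on the same host whose path starts with path_prefix."""
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    pages = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        content = fetch(url)
        pages[url] = content
        parser = LinkParser()
        parser.feed(content)
        for link in parser.links:
            # Resolve relative links and drop "#fragment" parts
            absolute, _fragment = urldefrag(urljoin(url, link))
            parts = urlparse(absolute)
            # Stay on the same site and inside the chosen section
            if parts.netloc != host or not parts.path.startswith(path_prefix):
                continue
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)  # scan this new page later
    return pages
```

Because `fetch` is a parameter, you can try the crawler without a network connection, for example by passing `lambda url: fake_site.get(url, "")` where `fake_site` is a dictionary of made-up pages.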

On the other hand, WebCopy can’t download a website’s server-side source code and doesn’t run JavaScript, so advanced, script-heavy websites may not work well offline. However, this is uncommon, and most downloads work fine.

To copy a website locally with WebCopy, you should:

- Get the app from the official website.

- Click the ‘File’ tab on the top-left side of the screen.

- Paste the website URL into the ‘Website’ field.

- Adjust the ‘Save Folder’ location.

- Go to the ‘File’ tab again.

- Select the ‘Save As…’ option to save the project.

- Select ‘Copy Website’ in the toolbar. This will start the scanning and copying process.

When the process finishes, you can review the results and any errors, and use the ‘Sitemap’ option to see the complete structure of the website, including all of its directories.

To open the website, go to your save folder in File Explorer and open ‘index.html’ in your default browser.
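If you open offline copies often, a small script can find and launch the entry page for you. This is a hypothetical Python helper, not part of WebCopy: it assumes the save folder contains an `index.html` somewhere inside it and picks the one closest to the top of the folder tree. The names `find_index` and `open_offline_copy` are made up for illustration.

```python
import webbrowser
from pathlib import Path


def find_index(save_folder):
    """Return the shallowest index.html under save_folder, or None."""
    candidates = sorted(
        Path(save_folder).rglob("index.html"),
        key=lambda path: len(path.parts),  # fewer path parts = closer to the top
    )
    return candidates[0] if candidates else None


def open_offline_copy(save_folder):
    """Open the site's index.html in the default browser."""
    index = find_index(save_folder)
    if index is None:
        raise FileNotFoundError(f"no index.html found under {save_folder}")
    # Browsers expect a file:// URI for local files
    webbrowser.open(index.resolve().as_uri())
    return index
```

For example, `open_offline_copy(r"C:\Websites\example")` would open the downloaded copy, assuming that is where you pointed the ‘Save Folder’.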

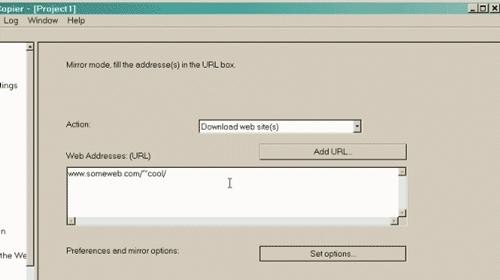

2. HTTrack

HTTrack is an extremely popular tool for downloading websites, partly because it is open-source and available for Linux, Windows, and Android.

Like WebCopy, it scans every page of a website for links, data, and media, and repeats the process recursively for each new page it finds. You can download multiple websites, and the tool organizes them automatically.

The best feature of this program is that it allows you to re-scan downloaded websites when online to update them with new content. There’s also an option to pause the download and resume it at any time.

To copy websites locally with HTTrack, you should:

- Get the tool from the official website.

- Open the app.

- Select ‘Next’ to start a new project.

- Fill in all the necessary project information.

- Select ‘Download web site(s)’ from the drop-down menu next to ‘Action’.

- Type the URL into the URL box. You can also import URLs from a text file, or drag and drop a web address into the box.

- Adjust the remaining options as needed, such as the number of retries, excluded links, and timeouts.

- Hit ‘Finish’ and wait for the website to download.

When the download finishes, look for the ‘index.html’ file in the download folder and open it with your default browser.
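On many systems HTTrack also comes with a command-line program, `httrack`, which is handy if you want to script your downloads. The Python sketch below assumes `httrack` is installed and on your PATH; the helper names `httrack_command` and `mirror` are hypothetical. It uses only two command-line features: `-O` to set the output folder, and scan rules starting with `-` to exclude matching links (the command-line counterpart of the excluded-links option in the wizard).

```python
import shutil
import subprocess


def httrack_command(url, output_dir, exclude_patterns=()):
    """Build an httrack command line that mirrors `url` into `output_dir`."""
    cmd = ["httrack", url, "-O", output_dir]
    # A scan rule with a leading "-" tells HTTrack to skip matching links
    cmd += [f"-{pattern}" for pattern in exclude_patterns]
    return cmd


def mirror(url, output_dir, exclude_patterns=()):
    """Run httrack and raise an error if it is missing or fails."""
    if shutil.which("httrack") is None:
        raise RuntimeError("httrack is not installed or not on PATH")
    subprocess.run(httrack_command(url, output_dir, exclude_patterns), check=True)
```

For example, `mirror("https://example.com/", "/tmp/example-mirror", exclude_patterns=["*.zip"])` would copy the site while skipping ZIP downloads.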

3. SiteSucker

SiteSucker works on the same principle as the previous two tools, but it is designed specifically for Mac and iOS devices. The latest version requires macOS 10.13 High Sierra or later. Unlike the first two, SiteSucker is a paid app, and there is no free trial.

This tool has a clean user interface and is very simple to use: paste the URL into the address bar and press Enter. The tool then copies all of the website’s data to your hard drive.

The best part is that you can save and share the download file itself. Opening this file re-downloads the same website structure. This is useful if you want to move the website copy to another device, and it also allows the program to pause downloads.

One of SiteSucker’s bigger downsides is that you can’t choose to download only parts of a site. Your only option is to download the full website, which often means downloading a lot of data you don’t need. For large websites, this can take a long time.

What Websites Would You Recommend?

With these tools, you can easily save any number of websites for offline use. However, keep in mind that large websites take a lot of time and disk space to download.

It’s impractical to download huge portals or hubs with gigabytes of content. On the other hand, many smaller websites offer plenty of interesting material to browse.

Are there any smaller websites that you would recommend downloading? If so, share them in the comments below!