Preventing Site Scraping

I run a website for a client where they display a large database of information that they have gathered accurately and slowly over the years. They are finding their data across the web in various places. More than likely it’s due to a scraper going through their site page by page and extracting the information into a database of its own. And in case you’re wondering, they know it’s their data because of a single planted piece of data in each category on their site.

I’ve done a lot of research on this the past couple of days, and I can tell you that there is not a perfect catch-all solution. I have, however, found several things that make a scraper’s job a bit harder. This is what I implemented for the client.

Ajaxified paginated data

If you have a lot of paginated data, and you are paginating it by just appending a different number to the end of your URL, e.g. http://www.domain.com/category/programming/2, then you are making the crawler’s job that much easier. The first problem is that it’s an easily identifiable pattern, so setting a scraper loose on these pages is easy as pie. The second problem is that, regardless of the URLs of the subsequent pages in the category, more than likely there are next and previous links for the scraper to latch on to.

Loading the paginated data through JavaScript without a page reload significantly complicates the job for a lot of scrapers out there. Google itself only recently started parsing JavaScript on the page. There is little disadvantage to loading the data like this. You provide a few fewer pages for Google to index, but, technically, paginated data should all be pointing to the root category page via canonicalization anyway. Ajaxify your paged pages of data.

Randomize template output

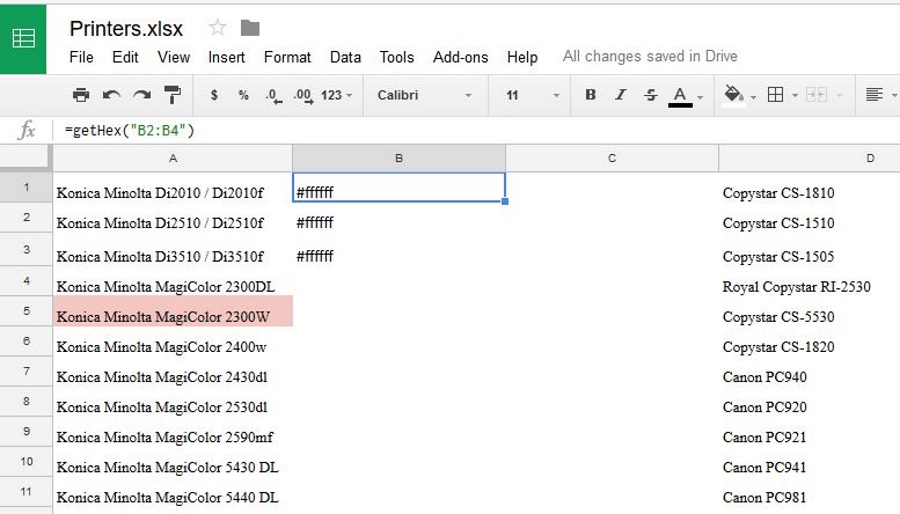

Scrapers will often be slightly customized for your data specifically. They will latch on to a certain div id or class for the title, the 3rd cell in every row for your description, etc. There is an easily identifiable pattern for most scrapers to work with, because data coming from the same table is usually displayed by the same template. Randomize your div ids and class names, and insert blank table columns at random with 0 width. Show your data in a table on one page, and in styled divs or a combination of both on another template. If you present your data predictably, it can be scraped predictably and accurately.
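As a rough illustration of the idea, here is a minimal Python sketch that renders one table row with throwaway class names and randomly placed zero-width decoy cells. The function name render_row and the record layout are assumptions made up for this example, not what was implemented for the client.

```python
import secrets
from html import escape


def render_row(record: dict[str, str]) -> str:
    """Render one record as a table row with per-request random markup."""
    cells = []
    for value in record.values():
        # A fresh, meaningless class name for every cell, so a selector
        # written against one response does not match the next one.
        cls = "c" + secrets.token_hex(4)
        cells.append(f'<td class="{cls}">{escape(value)}</td>')
        # Roughly half the time, follow the cell with an empty zero-width
        # decoy column so "the 3rd cell in every row" stops being stable.
        if secrets.randbelow(2):
            decoy = "c" + secrets.token_hex(4)
            cells.append(f'<td class="{decoy}" style="width:0;padding:0"></td>')
    return "<tr>" + "".join(cells) + "</tr>"


if __name__ == "__main__":
    print(render_row({"title": "Widget", "price": "9.99"}))
```

Because the class names are random, your own CSS cannot target them either, so the styling has to come from inline styles or from rules that do not depend on class names.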

HoneyPot

This is pretty neat in its simplicity. I’ve come across this method on several pages about preventing site scraping.

- Create a new file on your server called gotcha.html.

- In your robots.txt file, add the following:

User-agent: *
Disallow: /gotcha.html

This tells all the robots and spiders indexing your site not to index the file gotcha.html. Any normal web crawler, e.g. Google and Bing, will respect the wishes of your robots.txt file and not access that file. You may want to implement this step and then wait 24 hours before going to the next one. This ensures that a crawler doesn’t accidentally get blocked because it was already mid-crawl when you updated your robots.txt file.

- Place a link to gotcha.html somewhere on your website. It doesn’t matter where. I’d recommend the footer. Make sure this link is not visible, e.g. display:none; in CSS.

- Now, log the IP/general information of the perp who visited this page and block them. Alternatively, you could come up with a script to provide them with incorrect and garbage data. Or maybe a nice personal message from you to them.

Regular web viewers won’t be able to see the link, so it won’t accidentally get clicked. Reputable crawlers (Google, for example) will respect the wishes of your robots.txt and not visit the file. So the only computers that should stumble across this page are those with malicious intentions, or somebody viewing your source code and randomly clicking around (and oh well if that happens).
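The logging and blocking step can be sketched as a tiny WSGI application in Python. This is only an illustration under stated assumptions: the blocklist lives in memory (a real site would persist it or push it to the firewall), and REMOTE_ADDR is taken at face value, which is wrong behind a proxy or load balancer.

```python
import logging

HONEYPOT_PATH = "/gotcha.html"   # the path disallowed in robots.txt above
blocked_ips: set[str] = set()    # in-memory only; an assumption of this sketch


def app(environ, start_response):
    """Minimal WSGI app: block any client that ever requests the honeypot."""
    ip = environ.get("REMOTE_ADDR", "")
    path = environ.get("PATH_INFO", "")

    if path == HONEYPOT_PATH:
        # Log what we know about the visitor, then remember the address.
        logging.warning("honeypot hit from %s (%s)",
                        ip, environ.get("HTTP_USER_AGENT", "unknown agent"))
        blocked_ips.add(ip)

    if ip in blocked_ips:
        start_response("403 Forbidden", [("Content-Type", "text/plain")])
        return [b"Forbidden"]

    start_response("200 OK", [("Content-Type", "text/html")])
    return [b"<p>Regular page</p>"]
```

Instead of returning 403, the blocked branch could just as well serve garbage data or a personal message.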

There are a couple of reasons this might not always work. First, a lot of scrapers don’t function like normal web crawlers, and don’t discover the data by following every link from every page on your site. Scrapers are often built to home in on certain pages and follow only certain structures. For example, a scraper might be started on a category page and then told to visit only URLs with /data in the slug. Second, if someone is running their scraper on a network where a shared IP is being used, you will have to ban the whole network. You would have to have a very popular website indeed for this to be a problem.

Write data to images on the fly

Find a smaller field of data, not necessarily long strings of text, as this can make styling the page a bit more difficult. Output this data inside of an image. I feel quite confident there are methods in just about every programming language to write text to an image dynamically (in PHP, imagettftext). This is probably most effective with numerical values, as numbers provide a much smaller SEO advantage.

Alternative

This wasn’t an option for this project, but you could require a login after a certain number of pageviews, or display only a limited amount of the data to visitors who are not logged in. For example, if you have 10 columns, only display 5 to non-logged-in users.

Don’t make this mistake

Don’t bother trying to come up with some sort of solution based on the user-agent of the bot. This information can easily be spoofed by a scraper who knows what they’re doing. Googlebot, for example, can be easily emulated, and you more than likely don’t want to ban Google.

29 thoughts on “Preventing Site Scraping”

Despite some of the naysayers on Hacker News, automated web scraping and content theft can be prevented (without shutting down the websites, as some joked). Our company Distil (http://www.distil.it) helps protect website content and data, while improving their performance.

One suggestion I’ve read in a couple of the comments is to create a username/password login to protect the content. That often has no impact. You should google the PatientsLikeMe story, where Nielsen created an account, scraped all of the subscribed users’ data, and sold it.

They might deter some novice scrapers, but if a reasonably skilled programmer wants your content, they will get it. Speaking from personal experience, challenges such as the ones you propose simply strengthen my resolve to get the data.

all you will do is damage the experience of your site for regular users, break your markup, and do very little to prevent any scraper who wants your content

The trick is if someone wants to crawl your content and you want humans to read your content, you can’t make it fool proof without asking something more secure from the user, but if you can make it a bit more expensive for the script kiddies, they might choose cheaper targets…

but for real, unless you implement something that makes it hard to extract the content, anything else is easy to avoid using something like: http://phantomjs.org/

good luck!

1) Ajax paginated data: it’s not hard to just request the page and then see what the links link to. You can use something like phantomjs to execute queries in a browser environment and click on the right links. No matter if you choose to use numbers or random words or anything else for pages, it’s easy to find this out.

2) Randomise template output: slightly annoying but xpath selectors are highly flexible, this would be a bit frustrating but really not that hard to code around. You’d only be selecting the columns you want, when designing your scraper you’d just ignore those. You would however likely be really confusing screenreaders!

3) Honeypot: it’s easy not to click on hidden links.

4) Write data to images: This actually is the most annoying thing for scrapers, although not impossible to get around with open source OCR software, it wouldn’t be 100% accurate and would be slow. However, you’re breaking (a) copy paste – people can’t copy paste from your tables now :( (b) screen readers again (c) Google can’t read your text.

Please please do not break the web; the web is designed to make things like scrapers easy. If you don’t want it shared, your solution is not a technical one. It is either a legal one (sue the copiers) or a business one (don’t show the information for free).

Your clients might not like this, but that’s just a fact of publishing on the internet. In fact, doing this is potentially illegal in the UK and other countries (not sure about US):

I agree completely, I tried to convince them to go behind a login wall, but no success. This is not something I would choose to personally implement as a scraper can get the data one way or another if they really want to.

There have been numerous times that people have put data in a website that really could be more valuable if it were in an API. Scraping is the worst way to make this data accessible, but it’s better than nothing.

Case in point: I was looking for a job several years ago. I picked a handful of companies I wanted to apply to and scraped their help wanted pages to search for keywords related to my field. Every day my bot would look for jobs and email me if one popped up. It is lame that I had to do this, but thankfully they didn’t do anything crazy to make it hard to scrape.

I do fully understand that some people have proprietary information that they really want to keep on their site. I’m afraid it’s probably a lost cause but doing these things you described can help. I just would hate to see the web get even more closed.

By the way, your suggestion for ajax actually makes life easier. It gives the scraper access to your data in a more easily digestible format. Yes, I’ll take JSON, thank you!

The more hoops you jump through to stop people scraping your site, the less time you spend working on other more useful/fruitful tasks

1) You have unlimited dollars for bandwidth and CPU. They may be cheap, but they aren’t free, and any allocation of them to serve to scrapers is taking away from actual visitors.

2) The content on your site has minimal value in being original.

If humans can read it, machines can read it.

If you scrape a website for some data, display it on your own website and link back to the original source.

The question is too vague.

Null if statement.

If you want people to see your data, the machines will be able to see it as well.

But creating images on the fly will cause enough pain for someone to think twice and maybe decide that it’s not worth it.

Blocking someone isn’t that effective either; a proxy-based solution is quite easy to implement. Your best bet would probably be to assume that JavaScript isn’t processed by the scraper: add a JavaScript-calculated variable to the parameters, and whoever fails gets an exact copy of your page with realistic but incorrect data. Quite evil actually, because it’ll be ages until someone figures out that the data is wrong, let alone why it’s wrong.

And I’d probably skip the CAPTCHAs, they’ll just annoy the users…

Good luck :)

They are already supplying fake data to see if they are being scraped.

Using this fake data they can find all the sites that are using their scraped data. Congrats, we now know who is scraping you with a simple Google search.

Now comes the fun part. Instead of supplying the same fake data to all, we need to supply unique fake data to every IP address that comes to the site. Keep track of which IP got which data.

Build your own scrapers specifically for the sites that are stealing your content and scrape them looking for your unique fake data.

Once you find the unique fake data, tie it back to the IP address we stored earlier and you have your scraper.
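The bookkeeping behind this could look something like the following Python sketch. Everything here is hypothetical: the SECRET value, the "ref-" format of the fake value, and the in-memory issued table are assumptions for illustration. Deriving the value with an HMAC simply makes it stable per IP without storing a random number up front.

```python
import hashlib
import hmac

SECRET = b"replace-with-a-private-key"   # hypothetical; keep it off the page
issued: dict[str, str] = {}              # fake value -> IP that received it


def canary_for(ip: str) -> str:
    """Derive a stable fake value for this IP and remember who got it."""
    digest = hmac.new(SECRET, ip.encode(), hashlib.sha256).hexdigest()
    canary = "ref-" + digest[:12]
    issued[canary] = ip
    return canary


def trace(scraped_text: str) -> set[str]:
    """Return the IPs whose fake values show up in text from another site."""
    return {ip for canary, ip in issued.items() if canary in scraped_text}


if __name__ == "__main__":
    planted = canary_for("203.0.113.5")
    print(trace(f"copied listing ... {planted} ..."))
```

In practice the fake value would need to look like real data from the category it is planted in, otherwise it is easy to spot and strip.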

This can all be automated at this point to auto-ban the crawler that keeps stealing your data. But that wouldn’t be fun and would be very obvious. Instead, what we will do is randomize the data in some way so it’s completely useless etc.

Sit back and enjoy

And this would not work if the IP changes every day or more often, or if multiple IPs are used. I have yet to see an admin go to the effort of putting fake data on another’s website, when the scraper can just delete it from their site and start scraping afresh using a new IP.

Seems like there is a learning curve. Do you have any tutorials you could point me to?

Ultimately, like you say in your post, nothing can totally prevent scraping, just make it a bit harder and a bit less economically viable for the scrapists.

I guess if your data is really that valuable, either sell the database or don’t have a public-facing website at all.

Track the user (by IP or any other means): if the user requests 100 pages in less than a minute = IP ban; if the user requests more than 1000 pages/day = IP ban. No HTML/image voodoo needed.
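A sliding-window counter is one simple way to implement this. The Python sketch below is an illustration only: the class name RateLimiter is made up, the thresholds are read loosely from the figures above (more than 100 requests in a minute, or more than 1000 in a day), and all state is kept in memory.

```python
import time
from collections import defaultdict, deque

# (window in seconds, maximum requests allowed inside that window)
LIMITS = [(60, 100), (86_400, 1000)]


class RateLimiter:
    """Ban an IP once it exceeds any of the configured request limits."""

    def __init__(self):
        self.hits = defaultdict(deque)   # ip -> timestamps of recent requests
        self.banned = set()

    def allow(self, ip, now=None):
        now = time.time() if now is None else now
        if ip in self.banned:
            return False

        q = self.hits[ip]
        q.append(now)
        # Forget timestamps that are older than the longest window.
        longest = max(window for window, _ in LIMITS)
        while q and q[0] <= now - longest:
            q.popleft()

        for window, limit in LIMITS:
            recent = sum(1 for t in q if t > now - window)
            if recent > limit:
                self.banned.add(ip)
                return False
        return True


if __name__ == "__main__":
    limiter = RateLimiter()
    results = [limiter.allow("203.0.113.9") for _ in range(105)]
    print(results.count(True), "allowed,", results.count(False), "refused")
```

The now parameter exists only so the behaviour can be checked with fixed timestamps. In normal use it is left out.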

Of course this method applies only to certain types of websites.

The only way to overcome this is to use random loading times, and a bunch of proxies. No one has gone that far with our website.

A scraper can then simply request pages at ‘normal user speed’ thus negating all your efforts.

Ah, you might then say that the scraper can then simply rely on this AJAX call to grab the data, which is even easier because they no longer need to parse the HTML! The (partial) solution to this is to have the AJAX call require a key, which is loaded via the initial page load. Having implemented this solution, this then requires the scraper to load the HTML page, then find the AJAX key in the JavaScript (which is more difficult to parse than just straight up HTML), and then use the key to make the AJAX call.
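One way such a key could work is sketched below in Python. This is an assumption about the mechanism, not the commenter’s actual implementation: the key is an HMAC over a session identifier and a timestamp, embedded in the initial page, and the AJAX endpoint rejects requests whose key is missing, forged, or too old. SECRET, MAX_AGE, and the function names are hypothetical.

```python
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"replace-with-a-private-key"   # hypothetical server-side secret
MAX_AGE = 300                            # seconds a key stays valid (assumed)


def _sign(session_id: str, ts: str) -> str:
    msg = f"{session_id}:{ts}".encode()
    return hmac.new(SECRET, msg, hashlib.sha256).hexdigest()


def issue_key(session_id: str, now: Optional[float] = None) -> str:
    """Create the key that gets embedded in the initial page's JavaScript."""
    ts = str(int(time.time() if now is None else now))
    return f"{ts}.{_sign(session_id, ts)}"


def check_key(session_id: str, key: str, now: Optional[float] = None) -> bool:
    """Validate the key sent along with the AJAX request."""
    now = time.time() if now is None else now
    ts, _, sig = key.partition(".")
    if not (ts.isascii() and ts.isdigit()):
        return False
    if now - int(ts) > MAX_AGE:
        return False   # key has expired; the page must be reloaded
    expected = _sign(session_id, ts)
    # Constant-time comparison of the two signatures.
    return hmac.compare_digest(sig.encode(), expected.encode())
```

The short lifetime means a scraper cannot harvest one key and reuse it indefinitely. It has to keep loading and parsing the HTML page.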

This doesn’t make it impossible to scrape your site, but it certainly raises the bar.

Requiring a login wouldn’t help much either.

My advice: don’t bother wasting your time on trying to block them, unless whatever is scraping you is really dumb.

But as long as it can be shown in a browser, it can be scraped. Period. Learn to live with it.

And did you know that if you offered a web service and charged us for the data that we’ll take that option 99% of the time? And at the bigger companies, nobody cares how much it costs if it makes it easier.

but yea referrers and user-agent protection…lol that stopped being safe the minute people started suggesting it. also it may help to get into the mind of some of these scrapers (such software as phantomjs, or nodejs web scraping tools)

– Each cell is a div

– the divs have no classes/IDs

– the divs are output in seemingly random order (e.g. a label cell is not necessarily anywhere near its value cell within the markup)

– each cell is explicitly positioned with inline CSS for the div to create the presentation

It will be more of a pain than usual, but I will get the data. I always get the data.

My precise mantra when doing anything scraping related.

I had to scrape a site laid out in a similar (awful) structure and found that in the end what worked best was just regexing the raw text of a segment of the page without any tags.
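For readers wondering what that looks like, here is a small Python sketch of the approach, assuming the values of interest have a recognizable shape (ISO dates in this made-up example). The markup is thrown away with the standard library’s HTMLParser and the regex runs over the remaining text, so class names, ids, and positioning styles never come into play.

```python
import re
from html.parser import HTMLParser


class TextOnly(HTMLParser):
    """Collect the text nodes of a document and ignore every tag."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        if data.strip():
            self.parts.append(data.strip())


def extract_dates(html: str) -> list[str]:
    """Return every YYYY-MM-DD date found in the page's visible text."""
    parser = TextOnly()
    parser.feed(html)
    parser.close()   # flush any text still buffered by the parser
    text = " ".join(parser.parts)
    return re.findall(r"\b\d{4}-\d{2}-\d{2}\b", text)


if __name__ == "__main__":
    page = ('<div style="position:absolute;left:9px">Updated 2013-04-02</div>'
            '<div>Widget</div>'
            '<div style="top:3px">Expires 2014-01-15</div>')
    print(extract_dates(page))
```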

http://search.cpan.org/~jesse/WWW-Mechanize-1.72/lib/WWW/Mechanize.pm