That Time Apple Told Apple III Customers to Drop Their Computers

Apple’s Steve Jobs was a perfectionist, occasionally to a fault, and a great example of this double-edged personality trait is the Apple III. We were reminded this weekend by Reddit user wookie4747 of the troubled Apple III computer, and of the solution offered by some Apple technical reps to customers facing one of the system’s many issues: pick it up and drop it.

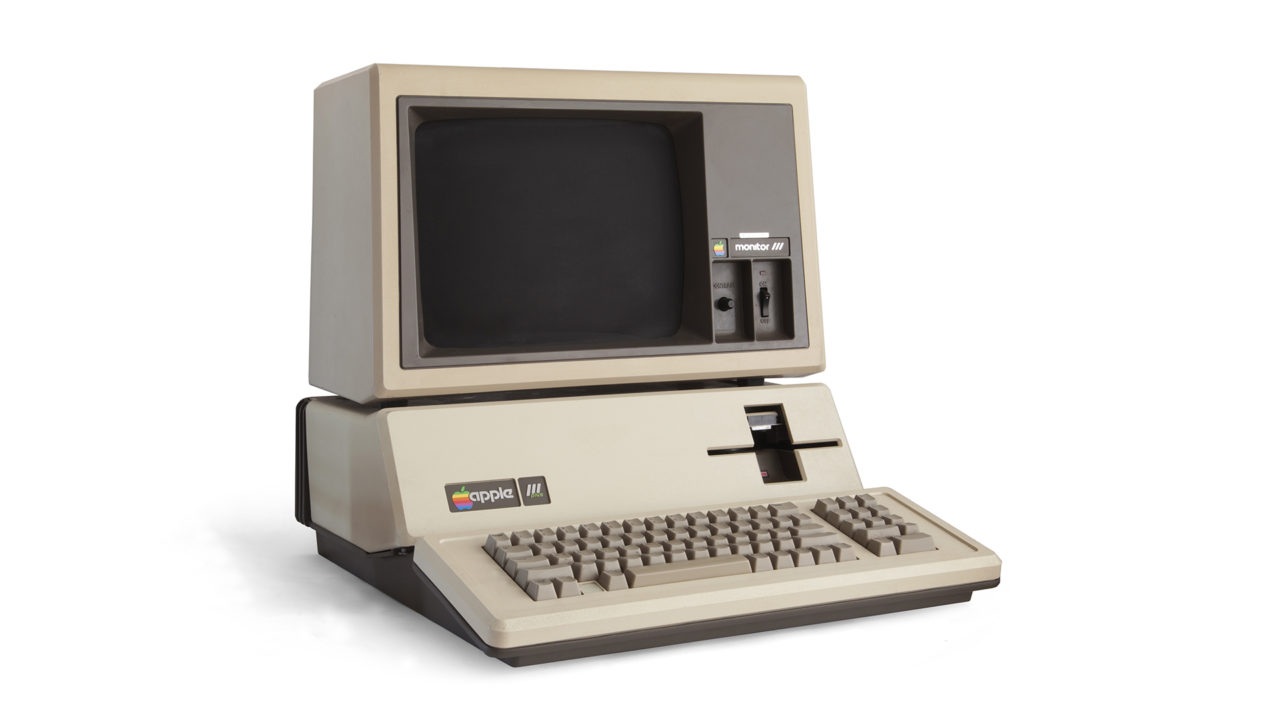

The Apple III was released in 1980 as the company’s answer to business users who needed more than the venerable Apple II could offer. But the design was driven by the marketing department rather than by Apple’s engineers, as Apple co-founder Steve Wozniak explained in his 2006 autobiography iWoz, and that, combined with unreasonable demands from Steve Jobs, produced a product with a plethora of flaws.

One such flaw was overheating, which could cause some of the system’s integrated circuits to shift or dislodge as they expanded. An obvious solution would have been to add fans and adequate ventilation, but Jobs hated the relatively loud fans found on many computers of the day, and didn’t want to mar the Apple III’s design with ugly vents. So the Apple III shipped without any fans or vents, relying instead on a massive aluminum heat sink that formed the base of the computer.

Without proper heat dissipation, computer magazine BYTE reported in 1982, the Apple III’s “integrated circuits tended to wander out of their sockets.” Owners of affected systems would begin to see garbled data on the screen, or notice that disks inserted into the system would come out warped or even “melted.”

These problems affected Apple’s own employees, too, with one of the company’s early engineers, Daniel Kottke, picking up his Apple III and slamming it down on the desk out of frustration. To his surprise, the computer “jumped back to life.”

Apple’s support engineers offered Apple III customers a similar solution:

Apple recommended users facing problems with the Apple III lift the computer two inches and drop it, as this would set the circuits back in place.

Apple later modified the Apple III’s internal design to add more heat sinks to the logic board, but the system still ran alarmingly hot.

Further design and software tweaks eventually led to a relatively stable Apple III platform, but by that point the system’s reputation was already irreversibly damaged. Apple discontinued the Apple III in April 1984, shortly after the launch of the groundbreaking Macintosh.

While we don’t recommend dropping your modern Macs or iDevices, Apple’s “try dropping it” suggestion from the early 1980s makes the company’s stance on other issues (e.g., “you’re holding it wrong”) far less surprising.

6 thoughts on “That Time Apple Told Apple III Customers to Drop Their Computers”

Which is almost certainly the fact that most home computers had external power supplies. Machines with internal power supplies often had fans. And ALL machines had vents! There were no airtight computers outside of Apple, as far as I know, ever!

Well, the Apple /// had a slot for the disks, but a single slot can’t set up any sort of convection.

The disk drive motors, too, would give off heat during use.

All it needed was a few nice stylish vents. The Atari ST had a lovely design, very minimal and stylish, and the vents were made in such a way as to be barely visible. Just not having any is stupidity of a Jobsian level, and could only have come from someone with his authority. Nobody else could ignore every engineer in the place screaming at him. That, and his famous ability to live in a fantasy world, stubbornly ignoring reality. I believe he was quite proud of that “ability”. Perhaps he didn’t realise the “reality distortion field” only applied to his own brain; he couldn’t actually affect reality.

He was a dick. And his trick with the number plates was dickish. And I’m glad he got cancer and hope it hurt.

The processor is at 253 watts. The welder 1,800.

Now, absolutely, amperage is the rate at which charge is flowing, and that current generates heat due to electrical resistance. But watts are a much fairer measure of heat generation here.

Many Commodore 64s met an early demise due to the PLA and SID chips overheating. The C64 was redesigned with an aluminum RFI shield whose bent legs, smeared with thermal grease, touched each IC that was prone to overheating. It’s a method that still works quite well today for collectors (like me). But damn, was that a cheesily built computer!

Also, other computers from back in those days had reliability problems. And I’ve also heard another version of this story in which it was Atari, not Apple, recommending that you drop the computer.

At the time I was assigned to work on the Apple/// project, Wendell Sander was running the project; later on, Tom Root took it over.

Don’t forget that the Apple][ (in all its various flavors) was in full parallel development, and was, until well after the Mac was released, Apple’s main income producer. And Woz was working during that time on the Apple][ and related projects. Some of that work applied to the Apple///, but others were doing the engineering and software development on that product.

The climbing chips problem was caused by several factors coming together, including the board flex, greater than usual temperature excursions because of the decision to use passive cooling, very tight sockets (which meant roughly that if a chip moved, it wasn’t allowed by the socket to move back on cooling, so it “walked” in one direction), and so on.

Most of the hardware issues were fallout from management’s “no fans” directive. (Other directives, as mentioned before, crippled the machine in other ways; the crippled Apple][ emulation in particular didn’t make things easier for either the hardware or the software.)

The problem turned out to be pretty simple to fix, and the fix was rolled into subsequent updates, along with things like replacing the original “fine line” motherboard with the easier-to-manufacture and more reliable “coarse line” board. Perfect design? No. But pretty minor, all things considered, and easy to fix, and it was.

The problem of chips slowly working their way out of their sockets was caused by the motherboard (and the metal tray that it was mounted in) flexing slightly during thermal cycling as the machine was turned on or off. The solution was not to pick up the computer and drop it a couple of inches (given its weight, that could be pretty hard on desks), but to lift up the front of the keyboard an inch or so and release it. And it usually worked, too.

Given chip reliability back then, it was a balancing act between using socketed chips (more cost, risk of chips coming unseated, …) and soldering everything to the motherboard (higher cost of repair if a chip did fail, …), and a manufacturer would take flak either way. Mounting and packaging technologies have come a long way since then; in general things are a lot more reliable, while component-level repair has pretty much gone away.

Some of the Apple///’s marketing decisions had as much or more to do with the product’s failure in the market, and had some long-term effects on Apple products (for a very long time there were no products with the number 3 as part of the name, among other things). One of the features of the Apple/// was that it included an Apple][ emulation mode, which made it possible to run Apple][ software while Apple///-specific applications came to market. Good idea, if it had been done right, which it wasn’t, thanks to the marketing department.

It seems that Apple Marketing was convinced that if the emulation was complete, sales of the $3,000+ Apple/// would cannibalize sales of the ~$1,300 Apple][, bankrupting the company. Which made no sense at all to those of us in engineering.

So the emulation was intentionally crippled. And we had a cautionary tale for future product developers…

So as usual WOZ is bullshitting.

If the board flexed, unseating the chips, it’s because the mountings didn’t allow it free movement as it expanded.

That’s not a result of unreasonable constraints from management, that’s the result of poor design.